SCAI Question 9

Mitigating Catastrophic Risks & Ongoing Harms

How can we mitigate the catastrophic risks and ongoing harms arising from AI, recognising that there are diverse opinions on the severity, probability, time sensitivity, and recoverability of these risks and harms?

Context & Assumptions

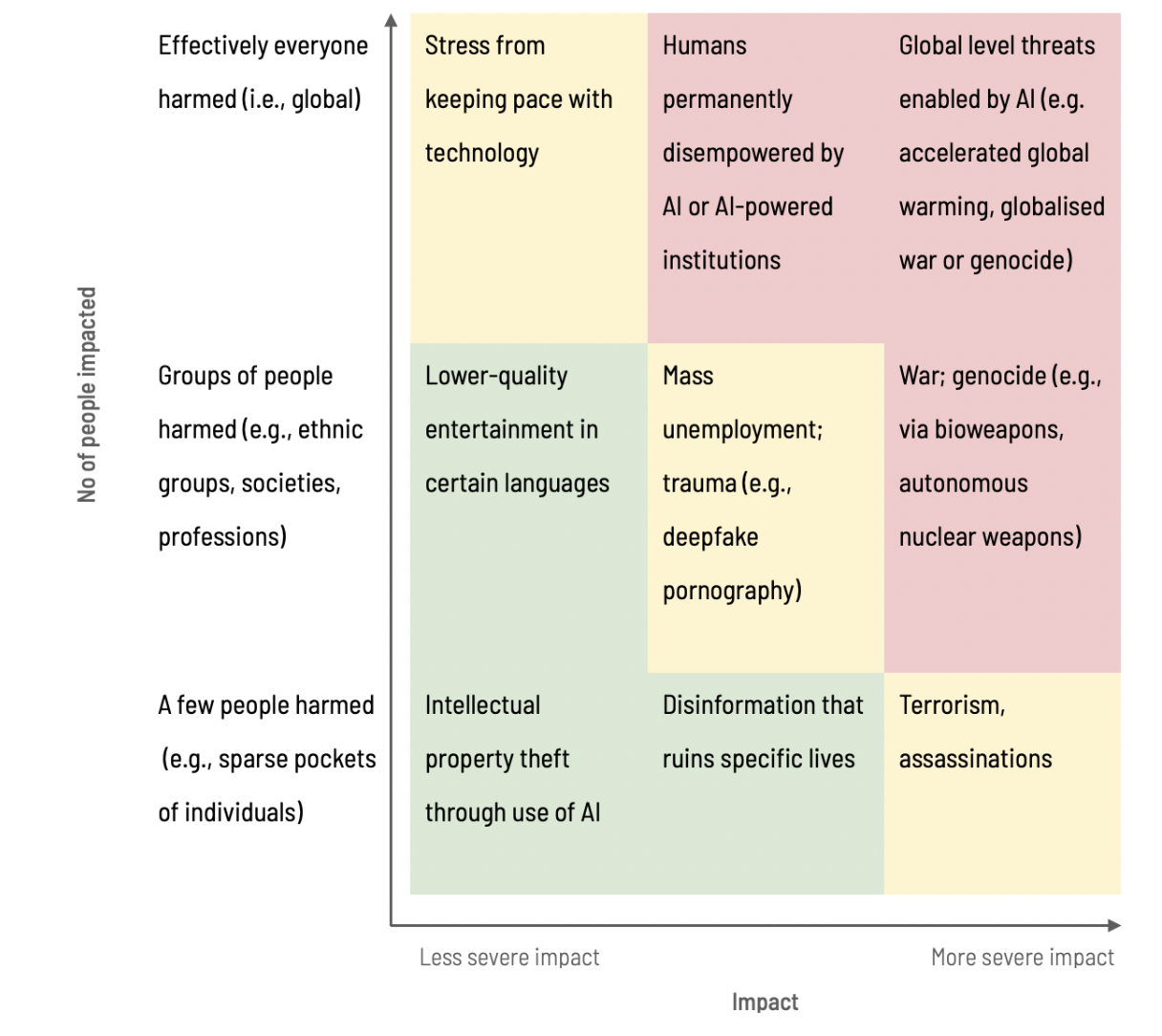

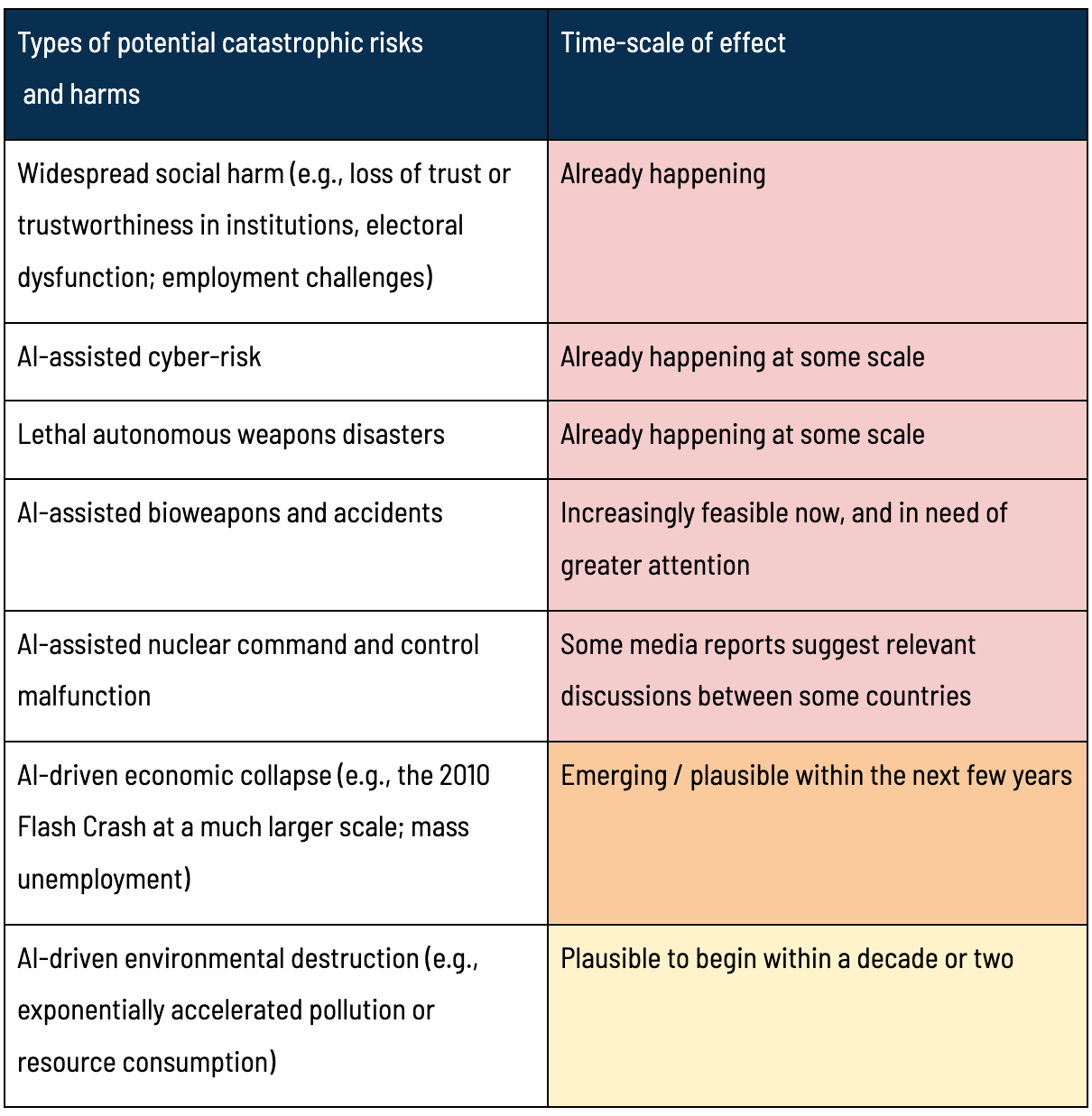

We recognise that there is a range of views on which risks and harms can arise, and on their severity, probability, time sensitivity, and recoverability. For instance, the figures above show some potential risks and our estimates of their time scales.

Risks and harms from AI can arise from various sources. They may occur by accident, intentionally, or through the willful indifference of various stakeholders. They may also arise at the systemic level, where no single party is responsible or accountable when the harm occurs (e.g., widespread, irreversible addiction to a technology that no particular entity is responsible for developing).

An assumption behind this question is that there may be warning signs, and that it would be valuable to actively look for them. Catastrophic harms can also arise without Artificial General Intelligence (AGI), since AI capabilities in narrow domains could already lead to societal-scale catastrophic risks.

Question

How can we mitigate the catastrophic risks and ongoing harms arising from AI, recognising that there are diverse opinions on the severity, probability, time sensitivity, and recoverability of these risks and harms?

To answer this question, we will also need a way to discuss and identify which risks and harms count as catastrophic and deserve more attention. For each such risk, we will need to answer the following questions: What are its warning signs (if any)? Who should be entrusted with monitoring those signs? On what time scale might it materialise? Who decides whether the risk is worth taking, and how? And if we fail to avoid it completely, how can we mitigate its effects and recover from it, and at what cost?

Indicators of Progress

To avoid catastrophic harms from increasingly advanced AI, we should establish clear warning signs and thresholds in advance across multiple areas. These include indicators and thresholds pertaining to computing power, demonstrations of dangerous AI capabilities, job losses, lawsuits, expert testimony from diverse disciplines, and the proliferation of fake content, impersonation, and cyberattacks.

Comprehensive safety evaluation infrastructure and standards should be developed for stress-testing mission-critical systems before full deployment. Benchmarks and standards should be developed for a range of technical and social considerations. Best practices such as red-teaming should also be established and scaled up.

Robust oversight mechanisms are also needed, involving consultation with diverse experts as well as representatives of potential victim groups. Audits should be independent and free of conflicts of interest. Given the potential for global impact, international oversight mechanisms may be warranted for the most powerful AI systems.

By systematically defining indicators and responses ahead of time, we can monitor progress and risk, and make proactive governance decisions before harms arise. The goal is to avoid a “boiling frog” scenario in which we react only once problems have become dire and harder to address.

Challenges include reaching consensus on what constitutes a “catastrophe”, assessing the likelihood of various catastrophic risks, and determining how far off they are. This complexity calls for a range of approaches, requiring more people and resources than would be needed to mitigate a single type of catastrophic risk.